Artificial Intelligence (AI) has long captured the human imagination, from Isaac Asimov’s fictional robots to real-world breakthroughs that are transforming industries and society. But the journey of AI from its symbolic beginnings to today’s generative giants has been anything but linear. It’s a story marked by waves of optimism, wintry setbacks, paradigm shifts, and now, unprecedented momentum.

In this post, we’ll explore the evolution of AI, tracing its journey through key eras and technologies, before diving into the trends shaping its future—from autonomous agents and edge AI to the pursuit of artificial general intelligence (AGI).

1. The Origins: Symbolic AI and Rule-Based Systems (1950s–1980s)

The roots of AI go back to the mid-20th century, inspired by the notion that human reasoning could be replicated by machines. Early AI systems were largely symbolic—they used logic, formal rules, and structured knowledge bases.

🔹 Key Milestones:

- 1950: Alan Turing proposes the Turing Test, a benchmark for machine intelligence.

- 1956: The Dartmouth Conference marks the birth of AI as a field. John McCarthy had coined the term Artificial Intelligence in the 1955 proposal for the workshop.

- 1960s–70s: Development of expert systems like DENDRAL (chemistry) and MYCIN (medical diagnosis) that used IF-THEN rules to emulate decision-making.

🧠 How it Worked:

Symbolic AI, later nicknamed Good Old-Fashioned AI (GOFAI), treated intelligence as symbol manipulation. These systems required extensive human knowledge engineering: experts had to codify every rule the machine would follow.
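To make the IF-THEN idea concrete, here is a minimal sketch of a rule engine that uses forward chaining. It is an illustration only, not how DENDRAL or MYCIN were actually implemented, and the rule set and fact names (`fever`, `suspect_pneumonia`, and so on) are hypothetical.

```python
from typing import FrozenSet, List, Set, Tuple

# A rule is (conditions that must all hold, conclusion to add).
Rule = Tuple[FrozenSet[str], str]

# Hypothetical toy rules, invented purely for illustration.
RULES: List[Rule] = [
    (frozenset({"fever", "cough"}), "respiratory_infection"),
    (frozenset({"respiratory_infection", "chest_pain"}), "suspect_pneumonia"),
]


def forward_chain(facts: Set[str], rules: List[Rule]) -> Set[str]:
    """Apply IF-THEN rules repeatedly until no new fact can be derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            # Fire the rule only if every condition is already known.
            if conditions <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


derived = forward_chain({"fever", "cough", "chest_pain"}, RULES)
print(sorted(derived))
```

Every conclusion the engine can reach has to be written into `RULES` by hand, which is the knowledge-engineering burden described above.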

❄️ Limitations:

- Couldn’t deal with ambiguity or incomplete information.

- Struggled with learning or adapting to new data.

- High maintenance and narrow domains.

These limitations, combined with unmet expectations, contributed to the AI winters: periods of reduced funding and interest in AI, first in the mid-1970s and again in the late 1980s.

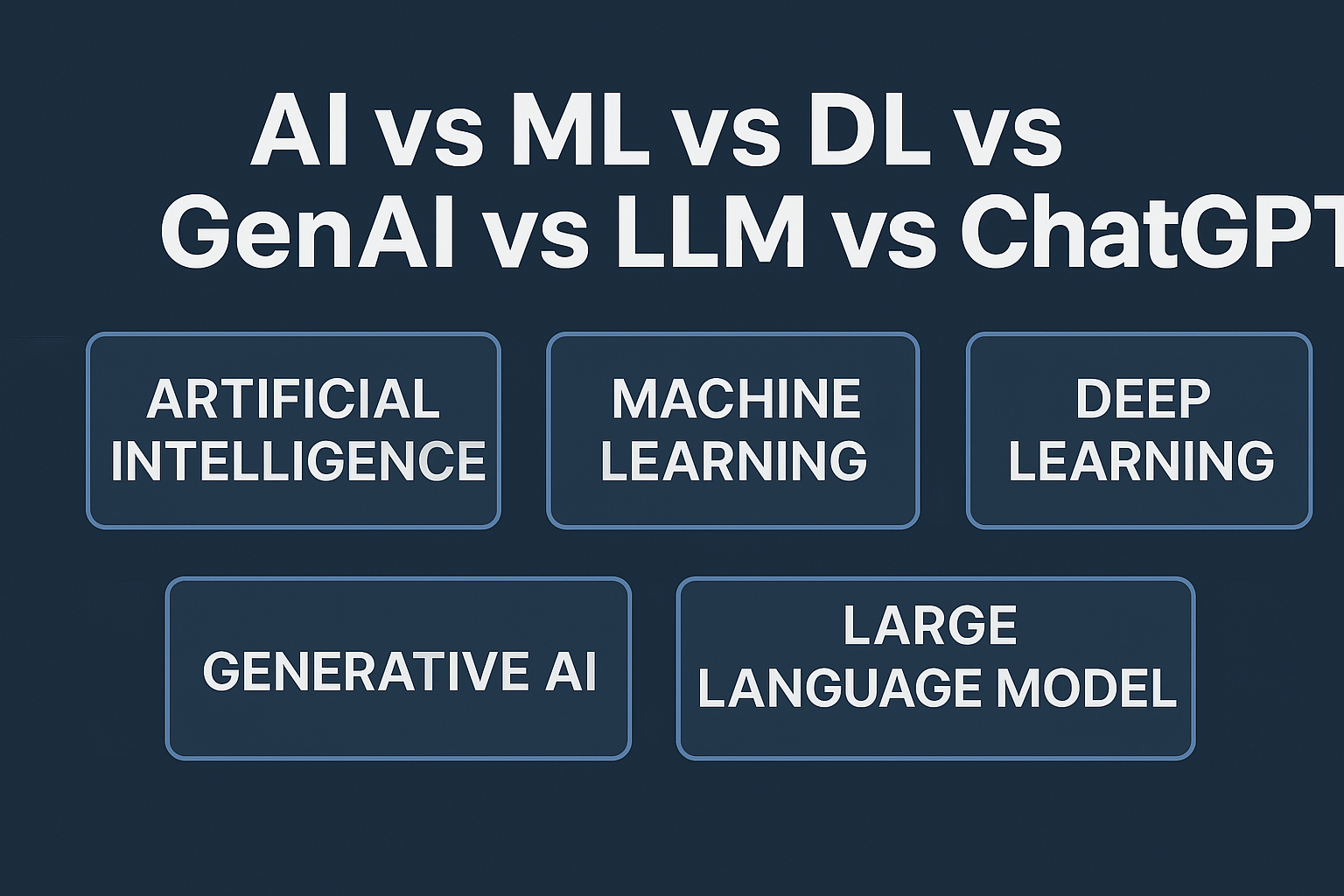

2. The Rise of Machine Learning (1980s–2010s)

The AI landscape began to shift with the rise of machine learning (ML)—a new approach that allowed machines to learn from data rather than rely on hard-coded rules.

🔹 Key Milestones:

- 1986: Revival of neural networks with backpropagation, enabling multi-layered learning.

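- 1997: IBM’s Deep Blue defeats world chess champion Garry Kasparov, although it relied mainly on brute-force search and handcrafted evaluation rather than on learning from data.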

- 2006: Geoffrey Hinton popularizes deep learning, which sets the stage for modern neural networks.

📊 How it Worked:

ML algorithms learn patterns from data through training. Instead of being explicitly programmed, they adjust internal parameters to minimize an error measure (a loss function) on tasks like classification, regression, or clustering.
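As a rough sketch of what "learning from data" means in practice, the snippet below fits a straight line to a handful of points with gradient descent on mean squared error. The function name `fit_line`, the learning rate, and the toy data are assumptions chosen for illustration. Real systems use libraries and far larger datasets.

```python
from typing import List, Tuple


def fit_line(xs: List[float], ys: List[float],
             lr: float = 0.05, epochs: int = 2000) -> Tuple[float, float]:
    """Fit y = w*x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Gradients of MSE = (1/n) * sum((w*x + b - y)^2) w.r.t. w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        # Step against the gradient to reduce the error.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Toy data generated from y = 2x + 1 (no noise).
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]
w, b = fit_line(xs, ys)
print(round(w, 3), round(b, 3))
```

Nobody tells the program that the slope is 2 and the intercept is 1. It recovers both values from the examples, which is the key contrast with hand-written rules.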

💡 Breakthroughs:

- Image recognition with convolutional neural networks (CNNs).

- Speech recognition and machine translation using recurrent neural networks (RNNs).

- Big data and cloud computing made large-scale training feasible.

3. The Era of Deep Learning and Large Language Models (2010s–2020s)

Since the early 2010s, AI models have scaled in both capability and size, culminating in large language models (LLMs) such as GPT and BERT. These models are trained on massive corpora of text and have demonstrated striking language understanding and generation capabilities.

🔹 Key Milestones:

- 2012: AlexNet wins ImageNet, reigniting interest in deep learning.

- 2018: Google’s BERT revolutionizes natural language understanding.

- 2020–Present: OpenAI releases GPT-3 (2020), then ChatGPT (2022), showcasing the power of transformers pretrained on unlabeled text with self-supervised learning.

🧠 How LLMs Work:

LLMs are based on the transformer architecture, which processes the tokens of a sequence in parallel and uses attention mechanisms to capture contextual relationships between words. With billions of parameters trained on vast amounts of text, these models can generate human-like text, write code, answer questions, and much more.
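The attention mechanism can be sketched in a few lines. The example below implements scaled dot-product attention, softmax(QKᵀ / √d) V, in plain Python. It is a simplified illustration: real transformers add learned projections, multiple heads, and masking, and they run on optimized tensor libraries. The helper names `softmax` and `attention` are choices made for this sketch.

```python
import math
from typing import List

Matrix = List[List[float]]


def softmax(row: List[float]) -> List[float]:
    """Turn raw scores into weights that are positive and sum to 1."""
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d)) V."""
    d = len(k[0])
    out: Matrix = []
    for q_row in q:
        # Similarity of this query to every key, scaled by sqrt(d).
        scores = [sum(a * b for a, b in zip(q_row, k_row)) / math.sqrt(d)
                  for k_row in k]
        weights = softmax(scores)
        # Output is the attention-weighted average of the value rows.
        out.append([sum(w * v_row[j] for w, v_row in zip(weights, v))
                    for j in range(len(v[0]))])
    return out


# Three toy token vectors attending to one another (self-attention).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
mixed = attention(tokens, tokens, tokens)
print(mixed)
```

Each output row blends information from all tokens, weighted by how similar they are to the query token. Because each query row is handled independently, the rows can be computed in parallel.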

📈 Industry Impact:

- Customer service chatbots and virtual assistants.

- Automated content generation.

- AI coding assistants and research summarization tools.

- Democratization of creativity and knowledge access.

4. The Future of AI: Emerging Trends and the Road to AGI

AI is evolving rapidly, with new frontiers on the horizon. Here are some of the most exciting and transformative trends shaping its future:

🔮 1. Generative AI

Beyond text, generative models are creating images, videos, music, and 3D models.

- Examples: DALL·E (images), Synthesia (videos), Runway (film editing).

- Implications: Creative industries are being redefined, with AI acting as a co-creator.

🤖 2. Autonomous Agents

AI agents can increasingly plan, reason, and execute multi-step tasks across applications and environments with limited human supervision.

- AutoGPT and BabyAGI showcase early forms of recursive AI agents.

- Future applications include personal AI assistants, research agents, and autonomous business operations.
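The plan-then-execute loop behind such agents can be sketched as follows. This is a toy illustration under stated assumptions, not the code of AutoGPT or BabyAGI: `toy_plan` and `toy_execute` are hypothetical stand-ins for a language model planner and a tool call, and the step where results feed back into new tasks is left out for brevity.

```python
from collections import deque
from typing import Callable, Deque, List


def run_agent(goal: str,
              plan: Callable[[str], List[str]],
              execute: Callable[[str], str],
              max_steps: int = 10) -> List[str]:
    """Plan tasks for a goal, then execute them in order, up to max_steps."""
    tasks: Deque[str] = deque(plan(goal))
    results: List[str] = []
    # The step cap keeps a misbehaving planner from running forever.
    while tasks and len(results) < max_steps:
        task = tasks.popleft()
        results.append(execute(task))
    return results


# Hypothetical stand-in for a model that breaks a goal into tasks.
def toy_plan(goal: str) -> List[str]:
    return [f"research {goal}", f"summarize {goal}"]


# Hypothetical stand-in for a tool or API call that carries out a task.
def toy_execute(task: str) -> str:
    return f"done: {task}"


for line in run_agent("edge AI trends", toy_plan, toy_execute):
    print(line)
```

Passing the planner and executor in as functions keeps the loop itself independent of any particular model or tool.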

🌐 3. Edge AI

AI models are moving closer to the source of data—on devices like smartphones, sensors, and robots.

- Why it matters: Lower latency because data does not have to travel to a remote server, increased privacy, and offline capabilities.

- Use cases: Smart home devices, autonomous vehicles, medical wearables.

🧠 4. Toward Artificial General Intelligence (AGI)

The holy grail of AI is AGI—machines that possess general cognitive abilities across domains, akin to human intelligence.

- AGI aims to transcend narrow, task-specific intelligence.

- Experts differ on timelines—some predict decades, others suggest sooner.

- Key challenges include reasoning, self-awareness, long-term memory, and ethical alignment.

AI’s Broader Impact on Society and Industry

The ripple effects of AI are vast and growing.

🔧 Industry Applications:

- Healthcare: AI in diagnostics, drug discovery, and personalized treatment.

- Finance: Fraud detection, algorithmic trading, and risk analysis.

- Education: Personalized tutoring, grading automation, and content recommendation.

- Logistics: Demand forecasting, route optimization, and autonomous delivery.

⚖️ Societal Considerations:

- Ethics & Bias: Ensuring fairness and accountability in AI systems.

- Employment: Reskilling the workforce amid automation.

- Regulation: Governments are grappling with how to regulate AI without stifling innovation.

- Safety: Ensuring alignment of superintelligent systems with human values.

Final Thoughts

From rule-based reasoning to self-learning neural networks, the story of AI is a testament to human curiosity and technological ingenuity. We’re now in a pivotal era where machines not only support our work but also partner with us in creativity, decision-making, and exploration.

As we move toward a future of increasingly intelligent systems, the questions become more profound: What kind of intelligence are we building? How do we ensure it benefits all of humanity? And are we prepared for the possibilities—both wondrous and worrisome—that lie ahead?

The journey of AI is far from over. In many ways, it’s just beginning.